Large Language Models (The use-case for RAG in Tanzania’s education system)

Let's spread the knowledge and enthusiasm for LLMs far and wide!

The goal here is to put knowledge into practice.

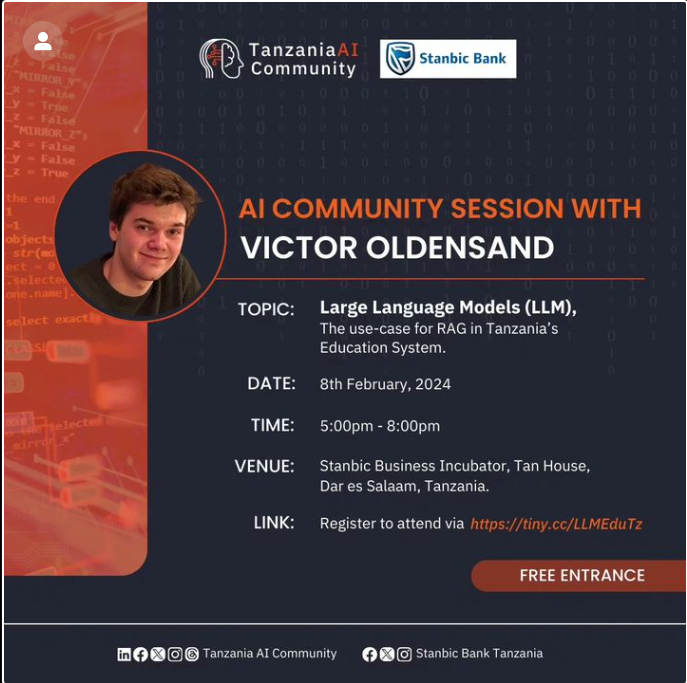

On the 8th of February, Tanzania AI Community hosted an extraordinary event centered on Large Language Models (LLMs). If you missed out, we've got you covered. Here's a rundown of the discussions we had.

First off, let's talk about the heart of the matter. What were the main topics on the table?

The event was expertly hosted by Victor Oldensand, who shed light on the application of retrieval-augmented generation to enhance the competence of generative AI in supporting Tanzanian educators. I want to clarify that I don't claim ownership of the valuable insights he provided, and any mistakes in this article are solely mine, not his.

Let me share what I grasped from the presenter and how I attempted to translate that understanding into action through coding challenges and implementations. A heartfelt thank you to Victor for the wealth of experience and knowledge he shared with us. It was truly invaluable.

Let's kickstart our summary by focusing on these key areas, just as our discussions unfolded.

Large Language Models (LLMs)

Retrieval Augmented Generation (RAG)

Evaluating RAG systems

The topics above were all discussed, along with the two below, which we'll skip here:

AI in Education

Challenges and Ethics

and dive straight into the coding implementation of what was discussed. Let's roll up our sleeves and get coding!

What are Large Language Models?

Large language models (LLMs) are deep learning algorithms that can recognize, summarize, translate, predict, and generate content using very large datasets. These models are based on the transformer architecture and are trained on massive textual datasets, enabling them to understand and generate human language. Some examples of popular large language models include GPT-3 and GPT-4 from OpenAI, LLaMA from Meta, and PaLM 2 from Google. These models have shown the potential to disrupt various industries and have been adopted for a wide range of applications.

What is Retrieval Augmented Generation (RAG)?

Retrieval-augmented generation (RAG) is a technique for improving the accuracy and reliability of generative AI models by retrieving facts from an external knowledge base, so that a large language model (LLM) is grounded in accurate, up-to-date information that lies outside its training data. RAG involves two phases: retrieval and content generation. In the retrieval phase, passages relevant to the user's question are fetched from the knowledge base; in the generation phase, the LLM answers using those passages as context. This extends an LLM to a specific domain or to an organization's specialized knowledge, and it gives users insight into how an answer was produced. Because the model's sources are available to users, it can cite them like footnotes in a research paper, so its claims can be checked for accuracy, which builds trust. The technique can be used with nearly any LLM to improve the quality of the generated content.
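To make the two phases concrete before we bring in any libraries, here is a minimal sketch in plain Python. Everything in it is made up for illustration: the three passages, the word-overlap scoring, and the generate() stand-in, which only builds the text a real system would send to an LLM. Real RAG systems retrieve with embeddings rather than shared words.

```python
import re

# A tiny, made-up knowledge base (illustration only).
KNOWLEDGE_BASE = [
    "Ultrasonic waves are sound waves with frequencies above 20 kHz.",
    "The piezo effect produces electric charge when a crystal is put under pressure.",
    "Tanzania is a country in East Africa.",
]


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, passages, top_k=1):
    """Phase 1: rank passages by how many words they share with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        passages,
        key=lambda passage: len(query_words & tokenize(passage)),
        reverse=True,
    )
    return ranked[:top_k]


def generate(query, context):
    """Phase 2 stand-in: build the prompt that a real system would send to an LLM."""
    joined = "\n".join(context)
    return f"Context:\n{joined}\n\nQuestion: {query}"


if __name__ == "__main__":
    question = "What is the piezo effect?"
    print(generate(question, retrieve(question, KNOWLEDGE_BASE)))
```

The rest of this article replaces both stand-ins with real components: a vector database for retrieval and an OpenAI model for generation.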

So how do we build an LLM application with RAG?

We will build a simple LLM application in Python using the LangChain library. Our RAG application will expand an LLM's knowledge using private data. In this case, it will be a PDF file containing some text: a document on Ultrasonics in Physics.

1. Prerequisites

At the very beginning, we must install all the modules that our application will use. Let's run this command in the terminal, in the project directory.

pip install langchain-community==0.0.11 pypdf==3.17.4 langchain==0.1.0 python-dotenv==1.0.0 langchain-openai==0.0.2.post1 faiss-cpu==1.7.4 tiktoken==0.5.2 langchainhub==0.1.14

Then create a ‘data’ directory and place the PDF file in it. We must also create a main.py file in the project directory, where we will store the whole code of our application.

The main file will look like this.

def main():
    print("Everything will be written here!")

if __name__ == "__main__":
    main()

2. Load the PDF file into the application

We will use a document loader provided by LangChain called PyPDFLoader.

from langchain_community.document_loaders import PyPDFLoader

pdf_path = "./data/Ultrasonics.pdf"

def main():
    loader = PyPDFLoader(file_path=pdf_path)
    documents = loader.load()
    print(documents)

if __name__ == "__main__":
    main()

First, we create an instance of PyPDFLoader, passing it the path to our file. Next, we call the load() method on this object and save the result in the documents variable. It is a list of Document objects, where each object represents one page of our file.

The print() function should output a list similar to this (page numbers in the metadata start at 0):

[Document(page_content='[...]', metadata={'source': './data/Ultrasonics.pdf', 'page': 0}), Document(page_content='[...]', metadata={'source': './data/Ultrasonics.pdf', 'page': 1}), ...]

3. Splitting document into smaller chunks

We don't want to send a whole document as context with our query to the LLM, because a long context costs more and may not fit in the model's context window. To split the document, we will use a class provided by LangChain called CharacterTextSplitter, which we can import from the LangChain library:

from langchain.text_splitter import CharacterTextSplitter

Then we can create an instance of it and call the split_documents() function, passing our loaded documents as a parameter.

def main():
    loader = PyPDFLoader(file_path=pdf_path)
    documents = loader.load()
    text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=50, separator="\n")
    docs = text_splitter.split_documents(documents)

Let's briefly describe what's going on here.

First, we are creating a CharacterTextSplitter object, which takes several parameters:

- chunk_size - defines the maximum size of a single chunk. By default it is measured in characters, not tokens, because the splitter's default length function is Python's len.

- chunk_overlap - defines how much text consecutive chunks share, in the same unit. The overlap keeps some surrounding context at each boundary, so meaning is less likely to be lost where the text is cut.

- separator - defines the string on which the text is split before the pieces are merged into chunks.

In the docs variable, we will get a list of Document objects, just like the one returned by the load() method of the PyPDFLoader class. This time the list will contain more elements, because the pages have been split into chunks.
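To see how chunk_size and chunk_overlap interact, here is a simplified sketch in plain Python. It is not how CharacterTextSplitter works internally (that class first splits on the separator and then merges the pieces); split_with_overlap is a hypothetical helper that just slides a fixed-size window over the text.

```python
def split_with_overlap(text, chunk_size, chunk_overlap):
    """Cut text into windows of chunk_size characters that share chunk_overlap characters."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")

    step = chunk_size - chunk_overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the window has reached the end of the text
    return chunks


if __name__ == "__main__":
    # Each chunk starts with the last character of the previous one.
    print(split_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=1))
    # prints ['abcd', 'defg', 'ghij']
```

With chunk_size=1000 and chunk_overlap=50, as in our application, each new chunk can repeat up to 50 characters from the end of the previous one.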

4. Prepare environment variables and the API key

The next step will be converting these chunks into numeric vectors and storing them in a vector database. This process is called embedding. We will talk about embedding in more detail next time, so we won't go deep into it now.

For the embedding process, we need an external embedding model. We will use OpenAI embeddings for this purpose. To do that, we have to generate an OpenAI API key.

But before that, we have to create a .env file where we will store this key.

Now, we need to create an account on the platform.openai.com/docs/overview page. Afterward, we should generate an API key on the platform.openai.com/api-keys page by creating a new secret key.

Copy the secret key and paste it into the .env file like this:

OPENAI_API_KEY=your_key

Replace your_key with your own OpenAI key.

Okay, let’s load environment variables into our project by importing the load_dotenv function:

from dotenv import load_dotenv

And call it at the very beginning of the main function:

def main():
    load_dotenv()
    loader = PyPDFLoader(file_path=pdf_path)
    documents = loader.load()
    text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=50, separator="\n")
    docs = text_splitter.split_documents(documents)
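A missing or empty key usually shows up only later, as an authentication error from the API. As an optional safeguard, a small check like the one below can fail early with a clearer message. The helper name require_env is hypothetical and is not part of python-dotenv or LangChain; it only reads from the environment.

```python
import os


def require_env(name, env=os.environ):
    """Return the value of an environment variable, or fail with a clear message."""
    value = env.get(name, "").strip()
    if not value:
        raise RuntimeError(f"{name} is not set; add it to your .env file")
    return value
```

In our application, it could be called as require_env("OPENAI_API_KEY") right after load_dotenv().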

5. Implementing the embedding process

First, we have to import the OpenAIEmbeddings class:

from langchain_openai import OpenAIEmbeddings

Then we should create an instance of this class. Let’s assign it to the 'embeddings' variable like this:

embeddings = OpenAIEmbeddings()

6. Setting up local vector database - FAISS

We have loaded and prepared our file, and we have also created an instance of the embedding model. We are now ready to transform our chunks into numeric vectors and save them in a vector database. We will keep all our data locally using the FAISS vector database. Facebook AI Similarity Search (Faiss) is a library developed by Facebook AI for efficient similarity search and clustering of dense vectors.

First, we need to import the FAISS class:

from langchain_community.vectorstores.faiss import FAISS

And implement the process of converting and saving embeddings:

def main():
    load_dotenv()
    loader = PyPDFLoader(file_path=pdf_path)
    documents = loader.load()
    text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=50, separator="\n")
    docs = text_splitter.split_documents(documents)
    embeddings = OpenAIEmbeddings()
    vectorstore = FAISS.from_documents(docs, embeddings)
    vectorstore.save_local("vector_db")

We have added two lines to our code. The first line takes our split chunks (docs) and the embeddings model to convert the chunks from text to numeric vectors. After that, we are saving the converted data locally in the 'vector_db' directory.
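To give a feel for what "similarity search" over these vectors means, here is a brute-force sketch in plain Python. The three-dimensional vectors and chunk names are invented for illustration (real OpenAI embeddings have far more dimensions), and cosine similarity is used only because it is easy to read; it is not necessarily the metric FAISS uses, and FAISS is built to do this kind of search efficiently on large collections.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query_vector, index, top_k=1):
    """Return the names of the top_k stored vectors most similar to the query."""
    scored = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [name for name, _ in scored[:top_k]]


# Made-up "embeddings" for three chunks (illustration only).
TOY_INDEX = {
    "chunk about piezo effect": [0.9, 0.1, 0.0],
    "chunk about sound speed": [0.1, 0.9, 0.1],
    "chunk about history": [0.0, 0.2, 0.9],
}

if __name__ == "__main__":
    # A query vector pointing mostly along the first axis.
    print(nearest([1.0, 0.0, 0.0], TOY_INDEX))
```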

7. Creating a prompt

To prepare the prompt, we will use the LangChain Hub. We will pull a prompt called 'langchain-ai/retrieval-qa-chat' from there. This prompt is designed for our case: it tells the model to answer questions using only the provided context. Under the hood, the prompt looks like this:

Answer any use questions based solely on the context below:

<context>

{context}

</context>

Let's import hub from the 'langchain' library:

from langchain import hub

Then, simply use the 'pull()' function to retrieve this prompt from the hub and store it in a variable:

retrieval_qa_chat_prompt = hub.pull("langchain-ai/retrieval-qa-chat")
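The {context} placeholder is filled in at run time with the text of the chunks. The sketch below imitates that step with plain string formatting. It is only an approximation: the pulled prompt is a LangChain chat prompt template rather than a single string, and both the "Question:" line and the build_prompt helper are assumptions added here for illustration.

```python
# Approximation of the prompt text; the "Question:" line is an assumption.
PROMPT_TEMPLATE = (
    "Answer any user questions based solely on the context below:\n\n"
    "<context>\n{context}\n</context>\n\n"
    "Question: {input}"
)


def build_prompt(context_chunks, question):
    """Join the chunks and substitute them, with the question, into the template."""
    context = "\n\n".join(context_chunks)
    return PROMPT_TEMPLATE.format(context=context, input=question)


if __name__ == "__main__":
    print(build_prompt(["First chunk.", "Second chunk."], "What is piezo effect?"))
```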

8. Setting up a large language model

Great. The next thing we need is a large language model. In our case, it will be one of the OpenAI models. It also needs an OpenAI key, but we already set that up for the embeddings, so we don't need to do it again.

Let's go ahead and import the model by extending the import we already have:

from langchain_openai import ChatOpenAI, OpenAIEmbeddings

And assign it to a variable in our main function:

llm = ChatOpenAI()

9. Retrieve context data from the database

Okay, we have finished preparing the vector database, embeddings, and LLM (large language model). Now, we need to connect everything using chains. We will need two types of chains provided by 'langchain' for that.

The first one is created with 'create_stuff_documents_chain', which we need to import from the 'langchain' library:

from langchain.chains.combine_documents import create_stuff_documents_chain

Next, pass our large language model (LLM) and prompt to it.

combine_docs_chain = create_stuff_documents_chain(llm, retrieval_qa_chat_prompt)

This function returns a Runnable object, which requires a context parameter. Running it will look like this:

combine_docs_chain.invoke({"context": docs, "input": "What is piezo effect?"})

10. Retrieve only the relevant data as a context

This works, but it sends every chunk to the model. We should pass only the information related to our query as the context. We will achieve this by combining this chain with another one, which will retrieve only the chunks important to us from the database and automatically add them as context to the prompt.

Let's import that chain from the 'langchain' library:

from langchain.chains import create_retrieval_chain

First, we need to prepare our database as a retriever, which will enable semantic search for the chunks that are relevant to our query.

retriever = FAISS.load_local("vector_db", embeddings).as_retriever()

So, we load the directory where we stored the chunks converted to vectors and pass in the same embeddings object, so that each query is converted to a vector in the same way as the chunks were. In the end, we turn the vector store into a retriever.

Now, we can combine our chains:

retrieval_chain = create_retrieval_chain(retriever, combine_docs_chain)

Under the hood, it will retrieve relevant chunks from the database and add them to our prompt as context. All we have to do now is invoke this chain with our query as an input parameter:

response = retrieval_chain.invoke({"input": "What is piezo effect?"})

As a response, we will receive a dictionary with three keys:

- input - our query;

- context - a list of documents (chunks) that were passed as context to the prompt;

- answer - the answer to our query generated by the large language model (LLM).

Let's print out the value stored under the "answer" key:

print(response["answer"])

Our printed answer looks as follows:

The piezo effect is a phenomenon in which certain materials, such as quartz, tourmaline, and Rochelle salt, generate an electric charge when subjected to pressure or mechanical stress. This means that if pressure is applied to one pair of opposite faces of a crystal, electric charge develops on the other pair of opposite faces. The piezo effect is used in piezo-electric generators or oscillators to produce ultrasonic waves.

Looks pretty nice :)
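Since the response also carries the retrieved chunks under the "context" key, we can show where an answer came from, which is the "cite your sources" benefit of RAG mentioned earlier. The sketch below is hypothetical: list_sources is our own helper, and FakeDocument is a stand-in used so the example runs without LangChain. It assumes each chunk has a metadata dictionary with 'source' and 'page' keys, as in the loader output shown in step 2.

```python
from dataclasses import dataclass, field


@dataclass
class FakeDocument:
    """Stand-in for a LangChain Document (illustration only)."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def list_sources(response):
    """Collect the unique (source, page) pairs of the chunks used as context."""
    sources = []
    for doc in response["context"]:
        entry = (doc.metadata.get("source", "unknown"), doc.metadata.get("page"))
        if entry not in sources:
            sources.append(entry)
    return sources


if __name__ == "__main__":
    fake_response = {
        "input": "What is piezo effect?",
        "context": [
            FakeDocument("...", {"source": "./data/Ultrasonics.pdf", "page": 3}),
            FakeDocument("...", {"source": "./data/Ultrasonics.pdf", "page": 3}),
        ],
        "answer": "...",
    }
    for source, page in list_sources(fake_response):
        print(f"{source}, page index {page}")
```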

11. You've made it! Our LLM app is ready

We have extended the knowledge base of the LLM with data from the Ultrasonics.pdf file. The model is now able to answer our questions based on the context that we have provided in the prompt.

Below is the whole piece of code.

from dotenv import load_dotenv
from langchain import hub
from langchain.chains import create_retrieval_chain
from langchain.chains.combine_documents import create_stuff_documents_chain
from langchain.text_splitter import CharacterTextSplitter
from langchain_community.document_loaders import PyPDFLoader
from langchain_community.vectorstores.faiss import FAISS
from langchain_openai import ChatOpenAI, OpenAIEmbeddings

pdf_path = "./data/Ultrasonics.pdf"

def main():
    # Load OPENAI_API_KEY from the .env file
    load_dotenv()

    # Load the PDF (one Document per page) and split it into chunks
    loader = PyPDFLoader(file_path=pdf_path)
    documents = loader.load()
    text_splitter = CharacterTextSplitter(
        chunk_size=1000, chunk_overlap=50, separator="\n"
    )
    docs = text_splitter.split_documents(documents)

    # Embed the chunks and save them in a local FAISS database
    embeddings = OpenAIEmbeddings()
    vectorstore = FAISS.from_documents(docs, embeddings)
    vectorstore.save_local("vector_db")

    # Build the chain that puts the retrieved chunks into the prompt
    retrieval_qa_chat_prompt = hub.pull("langchain-ai/retrieval-qa-chat")
    llm = ChatOpenAI()
    combine_docs_chain = create_stuff_documents_chain(llm, retrieval_qa_chat_prompt)

    # Retrieve only the relevant chunks and ask the question
    retriever = FAISS.load_local("vector_db", embeddings).as_retriever()
    retrieval_chain = create_retrieval_chain(retriever, combine_docs_chain)
    response = retrieval_chain.invoke({"input": "What is piezo effect?"})
    print(response["answer"])

if __name__ == "__main__":
    main()

12. More Tips

● For a RAG data pipeline, try LlamaIndex

● For a vector database suited to rapid prototyping, try ChromaDB

● There are many open source models available on Hugging Face

● For RAG evaluation, try TruLens

Well, let me tell you, we delved into various aspects of LLMs and the RAG concept, exploring its potential. Even if you couldn't make it to the event, don't worry, we've got some insightful nuggets to share.

Stay tuned as we unpack more of our discussions and share insights for AI enthusiasts. Wherever you are, in Tanzania or anywhere else, just build. That is what I was inspired to share.

I can't wait to see what you are building next.

For any feedback and chat, let's connect: Mgasa Lucas

Until next time, stay safe.