4 Popular Natural Language Processing Techniques

This article will demonstrate a few widely used natural language processing techniques.

Natural language processing (NLP) is a subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. Source: Wikipedia

It is most likely that you have used NLP in one or another way. If you have ever tried to contact a certain business through messages and got an immediate reply, probably it was NLP at work, or perhaps you have just gotten home from work, filled your cup with coffee, and asked Siri to play some relaxing seaside sounds. Without a doubt, you apply NLP.

Human language is very complex, filled with sarcasm, idioms, metaphors, and grammar to mention a few. All of these make it difficult for computers to easily grasp the intended meaning of a certain sentence.

Take an Example of a Sarcasm conversation:

John is sewing clothes while closing his eyes.

Martin: John, what are you doing, you're going to hurt yourself.

John: No I won't ,After a few moments, John accidentally injects himself with the needle.

Martin: well, what a surprise.

With Natural Language Processing(NLP) techniques we can break down human texts and sentences and process them so that can understand what's happening. In this article, we are going to learn with examples about the most common techniques and how they're applied, we will look on;

- Sentiment Analysis

- Text Classification

- Text Summarization

- And Named Entity Recognition

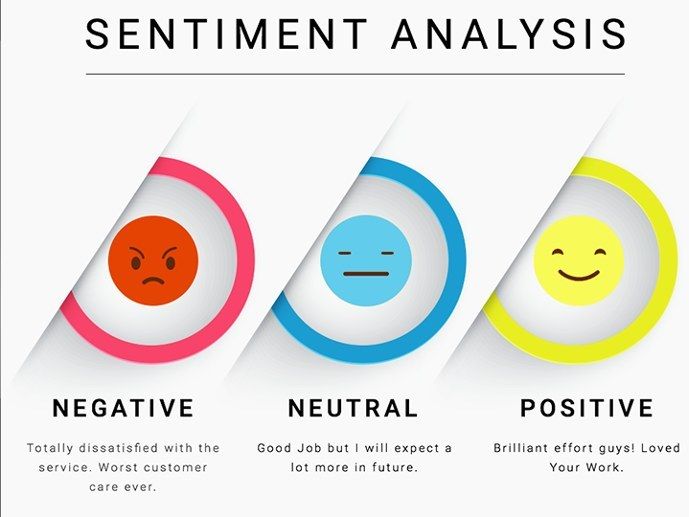

Sentiment Analysis

Most businesses want to know what are the customer's feedback concerning their services or products. But you might find millions of customers' feedback. Analyzing everything is very painful and boring even if you are offered a large amount of money when you accomplish that. Sentiment analysis can be useful in this situation.

Sentiment Analysis is a natural language processing technique which is used to analyse positive, negative or neutral sentiment to textual data.

Businesses use sentiment analysis to even determine whether the customer's comment indicates any interest in the product or service. Sentiment analysis can even be further developed to examine the mood of the text data (sad, furious, or excited).

Use case

To accomplish this, let's use the hugging face transformers library. We are going to use a pre-trained model from hugging face models called "distilbert-base-uncased-finetuned-sst-2-english"

# let's first install transformers library

$ pip install transformers

Once the library is installed, completing the task is quite simple.

>>> from transformers import pipeline

>>> analyser = pipeline("sentiment-analysis")

The above code will import the library and use a default pre-trained model to perform sentiment analysis.

>>> user_comment = "The product is very useful. It have helped me alot."

>>> result = analyser(user_comment)

>>> print(result)

# Output: [{'label': 'POSITIVE', 'score': 0.9997726082801819}]

The output shows that the sentiment of the user's comment is POSITIVE and the model is 99.9772% sure.

Text Classification

Text classification also known as text categorization is a natural language processing technique which analyses textual data and assigns them to a predefined category.

Spam emails occasionally arrive in your mailbox. When you click on one of these links, your computer may become infected with malware. Therefore, practically all email service providers employ this NLP technique to classify or categorize the email as either spam or not.

To effectively categorize your incoming emails, text classifiers are trained using a lot of spam and non-spam email data.

Use case

Let's try to create a simple text classifier to classify whether the text we input is spam. We are going to use the TextBlob library to achieve this.

Let's create some training data to train our own classifier.

train = [

('Congratulation you won a your prize', 'spam'),

('URGENT You have won a 1 week FREE membership in our 100000 Prize Jackpot', 'spam'),

('SIX chances to win CASH From 100 to 20000 pounds ', 'spam'),

('WINNER As a valued network customer you have been selected to receive 900 prize reward', 'spam'),

("Free entry in 2 a weekly competition to win FA Cup final tickets 21st May 2005. Text FA to 87121 to receive", 'spam'),

('I do not like this restaurant', 'no-spam'),

('I am tired of this stuff.', 'no-spam'),

("I can't deal with this", 'no-spam'),

('he is my sworn enemy!', 'no-spam'),

('my boss is horrible.', 'no-spam'),

('This job is bad', 'no-spam')

]

Now, let's import our classifier from the TextBlob library and train it with our created training data. We are going to use NaiveBayesClassifier

>>> from textblob.classifiers import NaiveBayesClassifier

>>> classifier = NaiveBayesClassifier(train)

After our training is complete (which might take less than two seconds according to your computer), we will input our text to see if it works.

>>> classifier.classify("Congratulation you won a free prize of 20000 dollars and Iphone 13")

# Output: 'spam'

Our simple model correctly identified our message as "spam," which it is.

Text Summarization

Text summarization is a natural language processing technique for producing a shorter version of a long piece of text.

Let's say you are tirelessly resting on your bed and receive a message from your boss to read a certain document sent to you. when you check the document, you find that it spans 10 pages. Text summarization might be a revolutionary idea for you.

Let's imagine that when you are drowsily sleeping, your boss sends you a message telling you to read a specific document. The document is ten pages long when you check it. For you, text-summarization might be a ground-breaking concept.

Text summarization models often take the most crucial information out of a document and include it in the final text. However, some models go so far as to try to explain the meaning of the lengthy text in their own words.

Use case

For this, we'll also make use of the transformers library.

>>> from transformers import pipeline

Then we are going to use the "summarization" pipeline to summarize our long text.

>>> summarizer = pipeline("summarization")

# If the you don't have the summarization model in your machine, It will be downloaded from the internet.

The lengthy text can then be copied and pasted from anywhere for summarization.

>>> long_text = """

The Solar System is the gravitationally bound system of the Sun and the objects that orbit it. It formed 4.6 billion years ago from the gravitational collapse of a giant interstellar molecular cloud. The vast majority (99.86%) of the system's mass is in the Sun, with most of the remaining mass contained in the planet Jupiter. The four inner system planets—Mercury, Venus, Earth and Mars—are terrestrial planets, being composed primarily of rock and metal. The four giant planets of the outer system are substantially larger and more massive than the terrestrials. The two largest, Jupiter and Saturn, are gas giants, being composed mainly of hydrogen and helium; the next two, Uranus and Neptune, are ice giants, being composed mostly of volatile substances with relatively high melting points compared with hydrogen and helium, such as water, ammonia, and methane. All eight planets have nearly circular orbits that lie near the plane of Earth's orbit, called the ecliptic.

"""

# You can set an optional parameter of max_length to maximum number of words you want to be outputted

>>> summarizer(long_text, max_length=80)

# Output: [{'summary_text': " The Solar System formed 4.6 billion years ago from the gravitational collapse of a giant interstellar molecular cloud . The vast majority (99.86%) of the system's mass is in the Sun, with most of the remaining mass contained in the planet Jupiter . The four inner system planets are terrestrial planets, being composed primarily of rock and metal ."}]

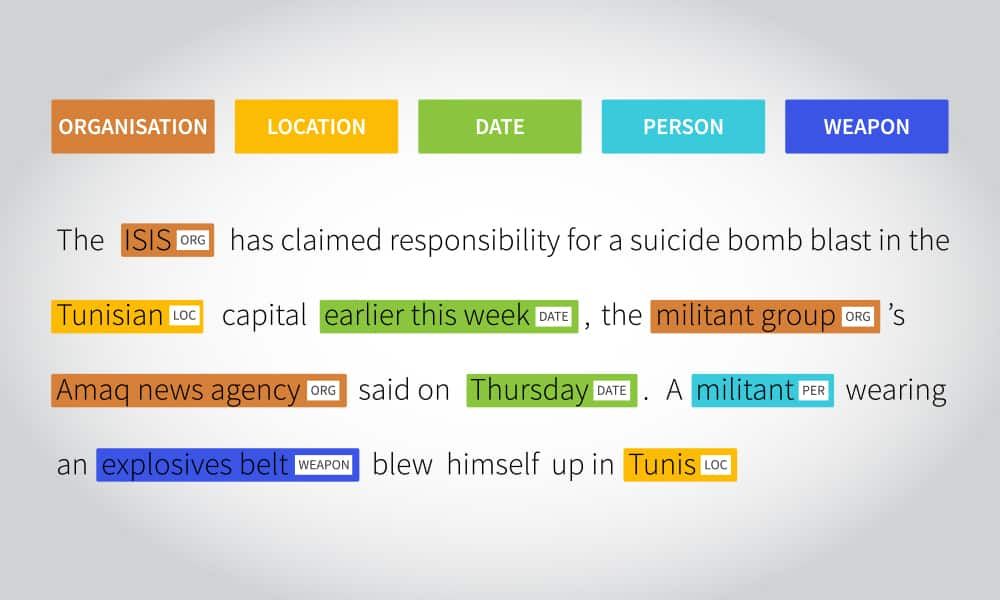

Named Entity Recognition(NER)

Have you ever heard fanciful tales about how a particular firm listens in on all calls, chats, and online interactions to see what people are saying about it? Well, "if" this is true, one of their strategies might be named entity recognition. Because NER is a natural language processing technique that identifies and classifies named entities in text data.

Named entities are just real-world objects like a person, organization, location, product, etc. NER models identify ‘Dar-es-salaam’ as a location or ‘Michael’ as a man's name.

Use case

We will use the SpaCy library for this task. We need to install it and download an English pre-trained model to help us to achieve our task faster.

$ pip install -U spacy

# Then downloading the model

python -m spacy download en_core_web_sm

We are going to import the spacy library and then load the model we downloaded so we can perform our task.

import spacy

nlp = spacy.load("en_core_web_sm")

After loading our model, we can simply input our text and spacy will give us named entities present in our text.

doc = nlp("The ISIS has claimed responsibility for a suicide bomb blast in the Tunisian capital earlier this week.")

for ent in doc.ents:

print(ent.text, ent.label_)

spacy.displacy.render(doc, style="ent")

# Output: ISIS ORG

# Tunisian NORP

# earlier this week DATE

The output shows different entities detected by spaCy with their respective labels.

NOTE: If you didn't understand the meaning of an abbreviation in spaCy, you can use spacy.explain() to explain its meaning.

# Let's say you didn't understand the meaning of an abbreviation "ORG"

spacy.explain("ORG")

# Output: 'Companies, agencies, institutions, etc.'

The good news is that it's simple to get started with these techniques nowadays. Large language models like Google's Lamda and GPT3 are available to aid in NLP tasks. You may easily construct helpful Natural language processing projects with the use of tools like spaCy and hugging face.

Thanks.